Introduction to Neural Networks

By Rajesh Kumar Reddy Avula

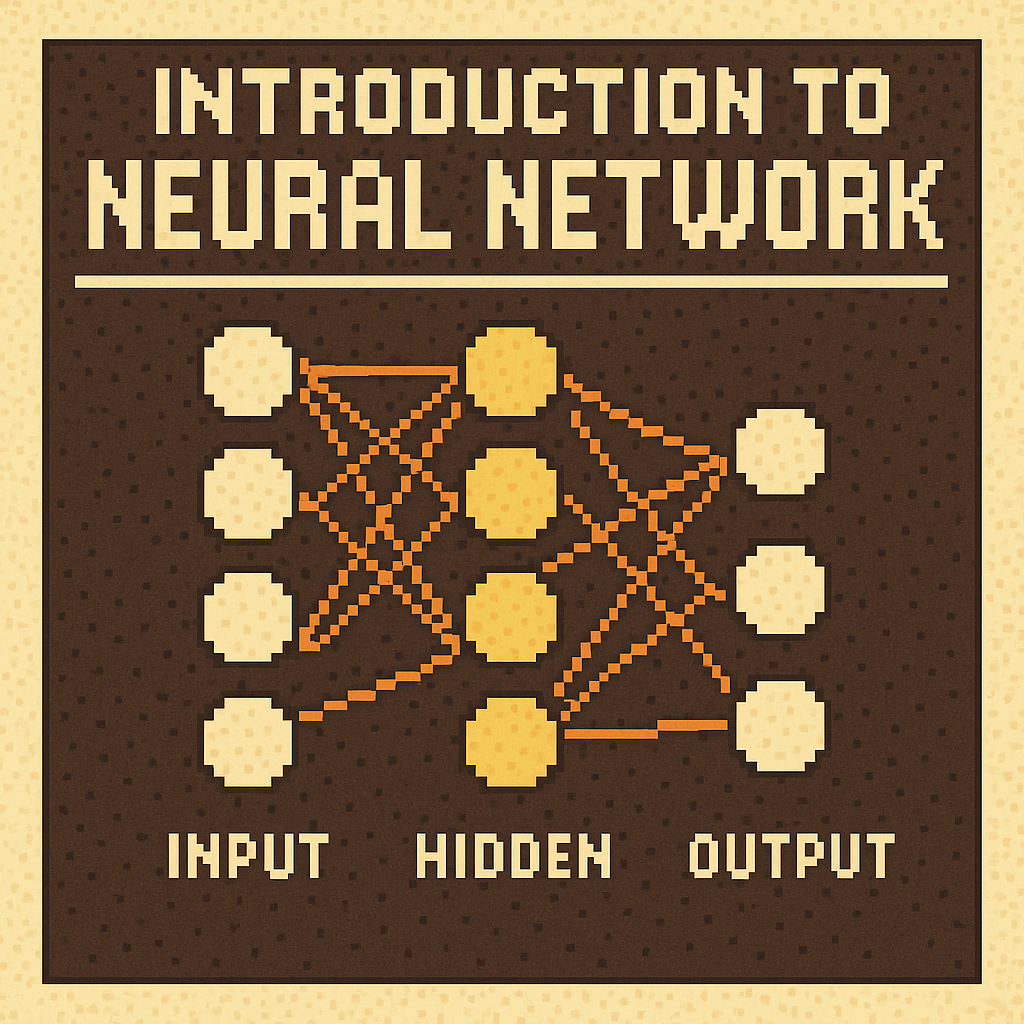

Neural networks form the foundation of modern machine learning systems, powering innovations in vision, speech, recommendation engines, and beyond. Loosely inspired by the architecture of the human brain, these models consist of layers of interconnected nodes that learn patterns in data and improve their predictions through iterative training.

Key Insights on Neural Networks

- Neural networks use artificial neurons arranged in structured layers to process information.

- They learn by adjusting weights using backpropagation and optimization techniques like gradient descent.

- Common architectures include feedforward, convolutional, and recurrent neural networks.

- PyTorch provides dynamic computational graphs that make neural network development intuitive and flexible.

Exploring Neural Networks Further

A neural network includes an input layer, one or more hidden layers, and an output layer. Each hidden layer computes a weighted sum of its inputs and passes the result through a nonlinear activation function such as ReLU or sigmoid, which is what lets the network model nonlinear relationships. PyTorch's torch.nn module makes building these architectures straightforward with pre-built layers and activation functions.
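As a rough illustration of the layer structure described above, the following sketch stacks pre-built torch.nn layers into a small feedforward network. The layer sizes and the random input batch are assumptions chosen only for the example, not values from this article.

```python
import torch
from torch import nn

# Hypothetical layer sizes, picked only for illustration.
IN_FEATURES, HIDDEN, OUT_FEATURES = 4, 8, 3

# Input features -> one hidden layer with ReLU -> output scores.
model = nn.Sequential(
    nn.Linear(IN_FEATURES, HIDDEN),   # weighted sum: input -> hidden
    nn.ReLU(),                        # nonlinear activation
    nn.Linear(HIDDEN, OUT_FEATURES),  # weighted sum: hidden -> output
)

batch = torch.randn(5, IN_FEATURES)   # 5 random example rows
logits = model(batch)                 # forward pass
print(logits.shape)                   # torch.Size([5, 3])
```

The output layer here returns raw scores. Whether to follow it with softmax or sigmoid depends on the task and on the loss function used during training.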

During training, a neural network minimizes prediction error through iterative weight updates: a loss function measures the error, backpropagation computes the gradient of that loss with respect to each weight, and an optimizer such as gradient descent adjusts the weights accordingly. PyTorch's automatic differentiation system (autograd) handles backpropagation, allowing developers to focus on model architecture rather than gradient computation. The flexibility of neural networks allows for powerful pattern recognition across a range of domains, from image classification to language translation.
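The training cycle described above can be sketched as a minimal loop. The synthetic data (a noisy line y = 3x + 1), the learning rate, and the number of epochs are all assumptions made for this example; real workloads would use their own data and tuned settings.

```python
import torch
from torch import nn

torch.manual_seed(0)  # make the example reproducible

# Synthetic data (assumed for illustration): y = 3x + 1 plus small noise.
x = torch.linspace(-1, 1, 64).unsqueeze(1)
y = 3 * x + 1 + 0.05 * torch.randn_like(x)

model = nn.Linear(1, 1)                       # one weight, one bias
loss_fn = nn.MSELoss()                        # mean squared error
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

losses = []
for epoch in range(200):
    optimizer.zero_grad()                     # clear gradients from the previous step
    loss = loss_fn(model(x), y)               # forward pass and loss calculation
    loss.backward()                           # backpropagation via autograd
    optimizer.step()                          # gradient descent weight update
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
print(f"learned weight: {model.weight.item():.2f}, bias: {model.bias.item():.2f}")
```

The learned weight and bias should end up close to 3 and 1, since the loop only has to recover the line used to generate the data.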

PyTorch for Neural Network Development

PyTorch has become one of the most widely used frameworks for neural network research and production deployment. Its dynamic nature allows for easy debugging and experimentation, while its ecosystem includes tools for computer vision (torchvision), natural language processing (torchtext), and audio processing (torchaudio). To get started, explore the official PyTorch documentation.
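One way to see what "dynamic" means in practice: the computation graph is built as the Python code runs, so ordinary control flow can depend on tensor values. The sketch below uses a hypothetical helper, repeated_scale, written only for this illustration.

```python
import torch

def repeated_scale(x: torch.Tensor, max_steps: int = 10) -> torch.Tensor:
    """Double x until its norm reaches 10, using plain Python control flow."""
    steps = 0
    while x.norm() < 10 and steps < max_steps:
        x = x * 2      # each iteration adds one operation to the graph
        steps += 1
    return x

w = torch.tensor([1.0, 2.0], requires_grad=True)
out = repeated_scale(w).sum()   # the loop runs 3 times for this input
out.backward()                  # autograd follows the path actually taken

print(out.item())   # 24.0
print(w.grad)       # tensor([8., 8.])
```

Because the graph is recorded at run time, a standard debugger or a print statement inside the loop shows real tensor values, which is much of what makes experimentation straightforward.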

Practical Applications in the Databricks Ecosystem

- Train neural networks using PyTorch or TensorFlow in Databricks notebooks with GPU acceleration.

- Track experiments with MLflow and use it to package and deploy deep learning models at scale.

- Leverage PyTorch Lightning for structured training workflows and distributed computing.

- Support a variety of real-world use cases like anomaly detection, computer vision, and natural language understanding.

Why Neural Networks Matter

- Scalable Learning: Neural networks adapt to large datasets and complex problems.

- Generalization Power: With enough hidden units and training data, they can approximate a very wide class of functions.

- Automation Ready: Neural networks reduce the need for manual feature engineering.

- Framework Flexibility: PyTorch's research-friendly design accelerates innovation and prototyping.

Neural networks are more than a research tool—they're production-grade intelligence engines, fueling today's AI-powered applications. PyTorch continues to democratize access to these powerful tools, making neural network development accessible to researchers and practitioners alike.
